Tips

GVCs vs. Orgs

- A "GVC" roughly corresponds to a Heroku "app."

- Images are available at the org level.

- Multiple GVCs within an org can use the same image.

- You can have different images within a GVC and even within a workload. This flexibility is one of the key differences compared to Heroku apps.
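The hierarchy can be pictured as a small Ruby model. The model is purely illustrative. The `Org`, `Gvc`, `Workload`, `Container`, and `Image` structs and all image and GVC names are assumptions. They are not part of the `cpln` CLI or any Control Plane API. The sketch shows one org-level image shared by two GVCs, and one workload that mixes two images.

```ruby
# Purely illustrative model of the org/GVC/workload hierarchy;
# these Structs are hypothetical and not a Control Plane API.
Image     = Struct.new(:name)                   # images are stored at the org level
Container = Struct.new(:name, :image)
Workload  = Struct.new(:name, :containers)
Gvc       = Struct.new(:name, :workloads)       # roughly a Heroku "app"
Org       = Struct.new(:name, :images, :gvcs)

app_image   = Image.new("my-app:42")            # hypothetical image names
proxy_image = Image.new("nginx:alpine")

staging = Gvc.new("my-app-staging", [
  Workload.new("rails", [Container.new("rails", app_image)])
])
production = Gvc.new("my-app-production", [
  # A single workload can run containers built from different images.
  Workload.new("rails", [
    Container.new("rails", app_image),
    Container.new("proxy", proxy_image)
  ])
])
org = Org.new("my-org", [app_image, proxy_image], [staging, production])

# Names of the GVCs whose workloads use a given org-level image.
def gvcs_using(org, image)
  org.gvcs.select do |gvc|
    gvc.workloads.any? { |w| w.containers.any? { |c| c.image == image } }
  end.map(&:name)
end

p gvcs_using(org, app_image)   # => ["my-app-staging", "my-app-production"]
p gvcs_using(org, proxy_image) # => ["my-app-production"]
```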

RAM

Any workload replica that reaches its maximum memory is terminated and restarted. You can configure alerts for workload restarts and for the percentage of memory used through the Control Plane UX, which links to Grafana.

Here are the steps for configuring an alert for the percentage of memory used:

- Navigate to the workload that you want to configure the alert for

- Click "Metrics" on the left menu to go to Grafana

- In Grafana, go to the alerting page by clicking the alert icon in the sidebar

- Click on "New alert rule"

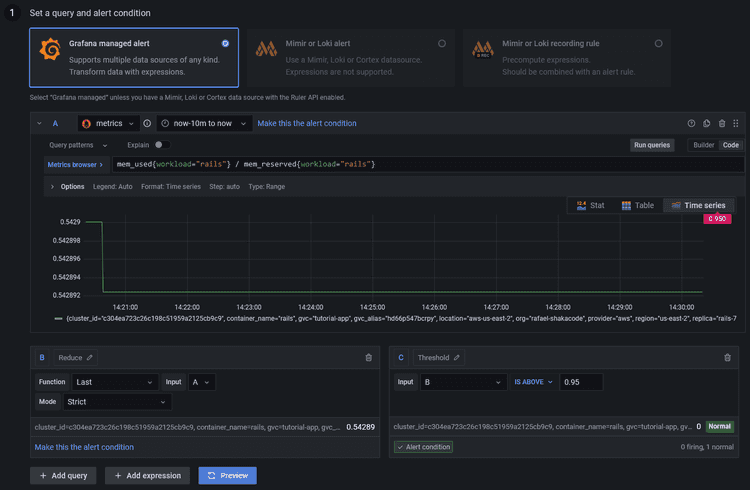

- In the "Set a query and alert condition" section, select "Grafana managed alert"

- There should be a default query named `A`

- Change the data source of the query to `metrics`

- Click "Code" on the top right of the query and enter `mem_used{workload="workload_name"} / mem_reserved{workload="workload_name"} * 100` (replace `workload_name` with the name of the workload)

- There should be a default expression named `B`, with the type `Reduce`, the function `Last`, and the input `A` (this ensures that we're getting the last data point of the query)

- There should be a default expression named `C`, with the type `Threshold`, and the input `B` (this is where you configure the condition for firing the alert, e.g., `IS ABOVE 95`)

- You can then preview the alert and check whether it's firing based on the example time range of the query

- In the "Alert evaluation behavior" section, you can configure how often the alert should be evaluated and for how long the condition should be true before firing (for example, you might want the alert only to be fired if the percentage has been above `95` for more than 20 seconds)

- In the "Add details for your alert" section, fill out the name, folder, group, and summary for your alert

- In the "Notifications" section, you can configure a label for the alert if you're using a custom notification policy, but there should be a default root route for all alerts

- Once you're done, save and exit in the top right of the page

- Click "Contact points" on the top menu

- Edit the `grafana-default-email` contact point and add the email address where you want to receive notifications

- You should now receive notifications for the alert by email
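Grafana evaluates all of this for you. The following Ruby sketch only mirrors the logic of query `A`, expressions `B` and `C`, and the pending period, so the semantics are easy to reason about. The `Sample` struct, the sample values, and the 10-second evaluation interval are made up. The pending logic is a simplified approximation of Grafana's behavior, not its implementation.

```ruby
# Hypothetical data point: time in seconds, memory in MiB.
Sample = Struct.new(:time, :mem_used, :mem_reserved)

# Query A: mem_used / mem_reserved * 100
def percent_used(sample)
  sample.mem_used.to_f / sample.mem_reserved * 100
end

# Expression B: Reduce with the "Last" function keeps the newest data point.
def reduce_last(samples)
  percent_used(samples.max_by(&:time))
end

# Expression C: Threshold "IS ABOVE 95".
def above_threshold?(value, threshold = 95)
  value > threshold
end

# Evaluation behavior (approximation): return the first evaluation time at which
# C has been true continuously for at least `pending` seconds, or nil.
# `evaluations` is a list of [eval_time, samples_visible_at_eval_time].
def first_firing_time(evaluations, pending: 20)
  breach_started = nil
  evaluations.each do |time, samples|
    if above_threshold?(reduce_last(samples))
      breach_started ||= time
      return time if time - breach_started >= pending
    else
      breach_started = nil # condition broke, so the pending timer resets
    end
  end
  nil
end

# Hypothetical replica with 512 MiB reserved, evaluated every 10 seconds.
history = [[0, 400], [10, 490], [20, 500], [30, 505]].map { |t, used| Sample.new(t, used, 512) }
evaluations = history.each_index.map { |i| [history[i].time, history[0..i]] }
p first_firing_time(evaluations) # => 30
```

Usage remains above 95% from t=10 onward, so with a 20-second pending period the alert fires at the t=30 evaluation. A single dip below the threshold resets the timer.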

The steps for configuring an alert for workload restarts are almost identical, but the query code would be `container_restarts`.

For more information on Grafana alerts, see: https://grafana.com/docs/grafana/latest/alerting/

Remote IP

The actual remote IP seen by the workload container is in the 127.0.0.x network because requests arrive through a local proxy. That loopback address is therefore the value of `REMOTE_ADDR` in the request environment.

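However, Control Plane additionally sets the `x-forwarded-for` and `x-envoy-external-address` headers (and others, see: https://shakadocs.controlplane.com/concepts/security#headers). On Rails, the `ActionDispatch::RemoteIp` middleware should pick up `X-Forwarded-For` and automatically populate `request.remote_ip`. Rails trusts 127.0.0.0/8 as a proxy by default, so the middleware skips the loopback `REMOTE_ADDR` and uses the forwarded client address.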

So don't use `REMOTE_ADDR` directly; use `request.remote_ip` instead.
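The resolution step can be sketched in plain Ruby. This is a simplified illustration in the spirit of `ActionDispatch::RemoteIp`, not its actual code. It assumes a trusted-proxy list of loopback and private ranges, which is a subset of what Rails trusts by default. It also assumes the proxy appends the real client address to any `X-Forwarded-For` value the client sent.

```ruby
require "ipaddr"

# Assumption: loopback and private ranges, a subset of Rails' default trusted proxies.
TRUSTED = %w[127.0.0.0/8 ::1 10.0.0.0/8 172.16.0.0/12 192.168.0.0/16 fc00::/7]
          .map { |cidr| IPAddr.new(cidr) }

def valid_ip?(ip)
  IPAddr.new(ip)
  true
rescue ArgumentError # IPAddr::InvalidAddressError and AddressFamilyError
  false
end

def trusted?(ip)
  addr = IPAddr.new(ip)
  TRUSTED.any? { |range| range.include?(addr) }
end

# remote_addr: the peer address (127.0.0.x on Control Plane)
# x_forwarded_for: the raw X-Forwarded-For header value, or nil
def client_ip(remote_addr, x_forwarded_for)
  forwarded = x_forwarded_for.to_s.split(",").map(&:strip)
  chain = (forwarded + [remote_addr]).compact.select { |ip| valid_ip?(ip) }
  # Walk from the closest hop outward; the first untrusted address is the client.
  chain.reverse.find { |ip| !trusted?(ip) } || chain.first
end

puts client_ip("127.0.0.2", "203.0.113.7")               # => 203.0.113.7
puts client_ip("127.0.0.2", "198.51.100.9, 203.0.113.7") # => 203.0.113.7 (spoofed entry ignored)
puts client_ip("127.0.0.2", nil)                         # => 127.0.0.2
```

Walking the chain from the right is what makes a client-supplied leftmost entry harmless. Only the hop appended by the trusted proxy is believed.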

CI

Note: Docker builds are much slower on Apple Silicon, so if you're using Apple hardware, consider configuring CI to build the images.

Make sure to create a profile on CI before running any cpln or cpflow commands.

`CPLN_TOKEN=...`

`cpln profile create default --token ${CPLN_TOKEN}`

Also, log in to the Control Plane Docker repository if building and pushing an image.

`cpln image docker-login`

Memcached

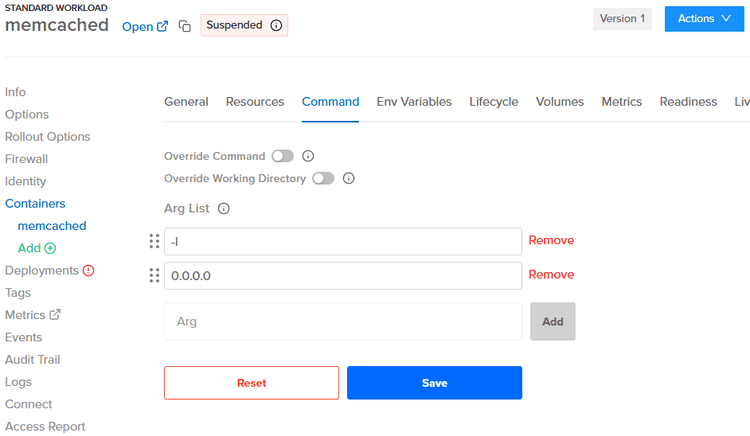

On the workload container for Memcached (using the `memcached:alpine` image), configure the command with the args `-l 0.0.0.0` so that Memcached listens on all IPv4 interfaces.

To do this:

- Navigate to the workload container for Memcached

- Click "Command" on the top menu

- Add the args and save
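Once the args are saved, you can check that Memcached answers from another workload, for example from a Rails console. The following sketch uses the `version` command of Memcached's text protocol. The host you pass in (your Memcached workload's internal hostname) and the 2-second timeout are assumptions. 11211 is Memcached's default port.

```ruby
require "socket"
require "timeout"

# Returns the server's version string, or nil if the reply isn't a VERSION line.
# Raises on connection errors or timeouts, which usually means Memcached
# isn't reachable at that address.
def memcached_version(host, port = 11211, timeout: 2)
  Socket.tcp(host, port, connect_timeout: timeout) do |sock|
    sock.write("version\r\n")
    line = Timeout.timeout(timeout) { sock.gets("\r\n") }
    # A healthy server replies with "VERSION <x.y.z>\r\n".
    line&.start_with?("VERSION ") ? line.chomp.split(" ", 2).last : nil
  end
end
```

For example, `memcached_version("<memcached-host>")` should return something like `"1.6.21"`.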

Sidekiq

Quieting Non-Critical Workers During Deployments

To avoid locks in migrations, we can quiet non-critical workers during deployments. Doing this early enough in the CI allows all workers to finish jobs gracefully before deploying the new image.

There's no need to unquiet the workers, as that will happen automatically after deploying the new image.
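The one-liner that follows quiets every Sidekiq process whose hostname doesn't start with `criticalworker.`. Its selection logic can be sketched in plain Ruby. `FakeProcess` is a hypothetical stand-in for `Sidekiq::Process`, so the sketch runs without Redis or Sidekiq. The hostnames are made up.

```ruby
# Hypothetical stand-in for Sidekiq::Process: exposes [] for attributes and quiet!.
class FakeProcess
  attr_reader :quieted

  def initialize(hostname)
    @attribs = { "hostname" => hostname }
    @quieted = false
  end

  def [](key)
    @attribs[key]
  end

  def quiet!
    @quieted = true
  end
end

# Same rule as the rails runner command: quiet everything except critical workers.
def quiet_non_critical(processes, critical_prefix: "criticalworker.")
  processes.each do |w|
    w.quiet! unless w["hostname"].start_with?(critical_prefix)
  end
end

processes = %w[criticalworker.abc123 worker.def456 worker.ghi789].map { |h| FakeProcess.new(h) }
quiet_non_critical(processes)
processes.each { |w| puts "#{w['hostname']}: quieted=#{w.quieted}" }
```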

`cpflow run 'rails runner "Sidekiq::ProcessSet.new.each { |w| w.quiet! unless w[%q(hostname)].start_with?(%q(criticalworker.)) }"' -a my-app`

Setting Up a Pre Stop Hook

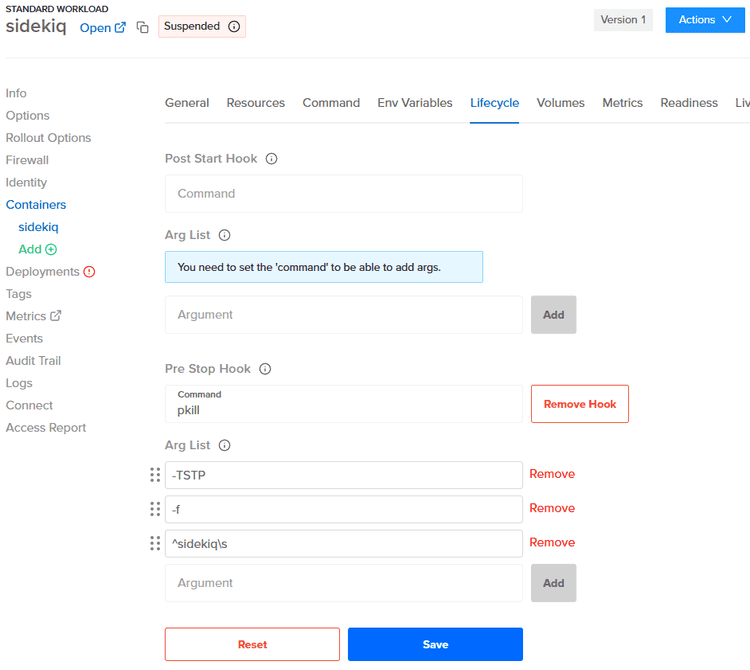

By setting up a pre stop hook in the lifecycle of the workload container for Sidekiq that sends the quiet signal (`TSTP`) to the workers, we can ensure that all workers finish their jobs gracefully before Control Plane stops the replica. This also works well with multiple replicas.

A couple of notes:

- We can't match on the process name, because the process is Ruby, not Sidekiq. That's why `-f` is used: it matches the pattern against the full command line.

- We need to match a whitespace character after `sidekiq`; otherwise, `TSTP` is also sent to the `sidekiqswarm` process, and for some reason, that doesn't work.

So with `^` and `\s`, we ensure the signal is sent only to worker processes.

`pkill -TSTP -f ^sidekiq\s`

To do this:

- Navigate to the workload container for Sidekiq

- Click "Lifecycle" on the top menu

- Under "Pre Stop Hook", add the command and args, and save
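The effect of the `^sidekiq\s` pattern can be checked against a few command lines. The sketch below assumes these lines are what `pkill -f` would see. All four command lines are hypothetical, and actual process titles depend on your Sidekiq version and setup. Ruby's `^` anchors at the start of a line. For these single-line strings, that behaves like the POSIX anchor used by `pkill`.

```ruby
# Hypothetical command lines as `pkill -f` might see them.
CMDLINES = [
  "sidekiq 7.2.4 app [0 of 5 busy]",                  # worker process
  "sidekiqswarm 7.2.4 app",                           # swarm parent
  "sh -c bundle exec sidekiq -C config/sidekiq.yml",  # hypothetical shell wrapper
  "ruby bin/rails server"
].freeze

# Command lines a given pattern would signal.
def targets(pattern)
  CMDLINES.select { |cmdline| cmdline.match?(pattern) }
end

p targets(/^sidekiq\s/) # only the worker
p targets(/^sidekiq/)   # worker and sidekiqswarm
p targets(/sidekiq\s/)  # worker and the shell wrapper
```

If you run the command from a shell instead of entering it as separate args, quote the pattern (`pkill -TSTP -f '^sidekiq\s'`). Unquoted, the shell reduces `\s` to `s`, and the pattern becomes `^sidekiqs`.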

Setting Up a Liveness Probe

To set up a liveness probe on port 7433, see: https://github.com/arturictus/sidekiq_alive
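A small Ruby helper like the one below can be run inside the Sidekiq container. It checks by hand that the liveness server answers the way an HTTP liveness probe expects, with a 2xx response within the timeout. Port 7433 comes from the text above. The `/` path and the 1-second timeouts are assumptions, so check the gem's README for its actual defaults.

```ruby
require "net/http"

# Returns true when the endpoint answers with a 2xx status in time, false otherwise.
def alive?(host = "127.0.0.1", port = 7433, path = "/", timeout: 1)
  http = Net::HTTP.new(host, port)
  http.open_timeout = timeout
  http.read_timeout = timeout
  http.get(path).is_a?(Net::HTTPSuccess)
rescue SystemCallError, SocketError, IOError, Net::OpenTimeout, Net::ReadTimeout, Net::HTTPBadResponse
  false # connection refused, DNS failure, timeout, or a garbled response
end
```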

Useful Links

- For best practices for the app's Dockerfile, see: https://lipanski.com/posts/dockerfile-ruby-best-practices

- For migrating from Heroku Postgres to RDS, see: https://pelle.io/posts/hetzner-rds-postgres